
This column was originally published on Jeff Neal’s blog, ChiefHRO.com, and was republished here with permission from the author.

The government is sitting on a treasure trove of HR data that it does not typically use. For example, agencies have data about performance, about where they recruit, and about what kinds of questions they ask in job announcements. I do not know of a single agency that is comparing the questions it asks to the performance it gets from the selectees. There are so many possibilities to use the data to produce actionable information that would help agencies hire better, get better performance, and use their resources more wisely.

I usually write every post on my blog myself, but I believe analytics are crucial to any effort to improve federal hiring, performance, and virtually everything else. I am not an expert on analytics, however, so I did not feel qualified to write about the specifics of how to do it. My colleague Michael (Whit) Whitaker is an expert on analytics, so I asked him to write a guest post on the use of analytics and what agencies should look for when they think about analytics expertise.

-Jeff

As mission leaders and human resources officers consider how best to meet the analytics needs their organizations have in carrying out their missions, it may be worth reconsidering the relative priority of hiring elusive and expensive data scientists. The buzz around the term “data scientist” and the hiring frenzy for data scientists’ skills are as strong as ever as organizations seek individuals who combine strong technology skills with business savvy. However, some interesting trends in the evolution of analytics may lead organizations to reassess how pressing the need to hire individual data scientists really is, and to focus instead either on cultivating analytics-savvy domain experts or on building teams that can use modern analytics effectively to address organizational challenges and drive results. A Fortune magazine article acknowledges the hot market for data scientists but cautions that the growing number of data science training programs, combined with analytics automation, may soon begin to dampen demand. Let’s look briefly at the evolution of analytics across three broad generations to illustrate the changing value of expertise and what the government should be looking for today.

Gen 1.0 can be described as human-driven analytics, generally characterized by small data and batch processing. The data and the analytics inputs and outputs are intuitive for humans to understand and manipulate. Many experts combine deep domain expertise with the ability to perform the analytics themselves using standard tools such as Excel, statistical software, or geospatial packages. Gen 1.0 solutions have been delivering value to organizations for many decades, drawing on in-house experts and analysts working with off-the-shelf tools. Example solutions in this generation include:

The rise in demand for data scientists has coincided with Gen 2.0, which can be described as data-driven analytics. Gen 2.0 is defined by big data and machine learning, with rapid technological advancement enabled by the cloud. Example Gen 2.0 solutions include:

The scope and scale of analytics in this generation have begun to test the limits of what human intuition can readily grasp. Most importantly, domain and technology skills have started to diverge. It is no longer easy for a single individual to be an expert in a domain, identify a problem, and deliver the analytics needed to solve it in a ubiquitous tool like Excel. With the rapid evolution of analytics technology, it is becoming increasingly difficult for individuals to maintain deep, current expertise both in a specific domain and in the tools and techniques required to deliver modern analytics solutions. Gen 2.0 has therefore placed growing emphasis on data scientists who can manipulate data and develop custom algorithms in a quest to unlock hidden value. This quest can be viewed in part as an attempt to recombine the diverging domain and analytics skill sets in individuals, but this time with greater emphasis on analytics skills than on domain expertise.

While Gen 2.0 has proven disruptive with rapidly evolving technologies and the promise of using big data analytics to extract untapped value from the deluge of data, the transition to Gen 3.0 is already beginning in many sectors. Gen 3.0 can be described as machine-driven analytics that involve machine-to-machine communication and advanced artificial intelligence. Examples include:

One benefit of the Gen 3.0 era will be that analytics technologies and algorithms become increasingly commoditized, requiring less custom development and making advanced capabilities more widely available. Using advanced analytics will be more about connecting to and applying the right tool from a machine learning or artificial intelligence library, or selecting the proper algorithm for the specific problem, than about developing and coding new analytics techniques from scratch. Gen 3.0 should expand the ability of federal agencies and other organizations to use complex analytics and make far better use of the mountains of data the government collects and maintains.

The challenge with Gen 3.0 is that while the analytics will become increasingly powerful and widely available, they will also become harder to understand intuitively. The technologies will take in user requests and deliver answers based on advanced artificial intelligence and machine learning, but the path from question to answer will increasingly run through a black box that hides the stepwise logic from detailed inspection. The risk in trusting the outputs of black-box algorithms is the potential for unintended consequences when the algorithms are wrong. In discussing the limits of artificial intelligence and machine learning technologies, Arati Prabhakar, Director of the Defense Advanced Research Projects Agency (DARPA), warns that when artificial intelligence and machine learning algorithms are wrong, they can be wrong in ways that no human would ever be.

Because DARPA regularly investigates technology that is decades ahead of what is broadly available in private or public applications, its warning should give all organizations substantial pause in their quest to hire teams of data scientists and unleash them on their datasets to extract value. As we enter the era of Gen 3.0 analytics, there will be increasing demand for domain experts who are fluent in the capabilities, limitations, and appropriate application of emerging technologies. These experts will be asked to select the right analytics approach for a specific mission challenge, help train the algorithms so they are applied properly to that challenge, and validate that the outputs are reasonable. They will also need to assess the non-data constraints on any recommended actions, including politics, physical infrastructure, social systems, and cultural norms. While technical experts can help integrate and deploy selected solutions, it will be imperative that domain experts understand the capabilities and limitations of emerging solutions well enough to know which approach is most appropriate and to recognize the risks of algorithms making the wrong decisions.

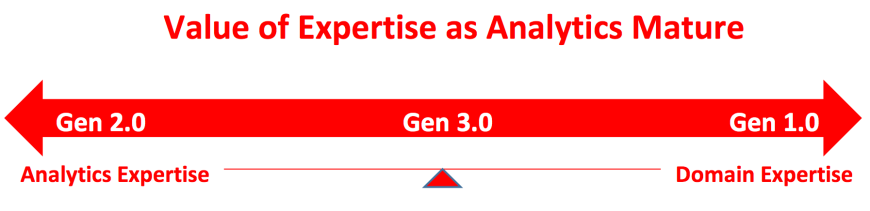

Let’s consider a spectrum of skills, with very strong technology and analytics expertise on the left and deep domain expertise on the right. In Gen 1.0 analytics, the greatest value came from employees who were strong domain experts and had enough technology skill to deliver relevant analytics themselves. Those analytics skills were relatively easy for domain experts to acquire, because the tools were simpler and their capabilities rudimentary. As evidenced by Gen 2.0 analytics and the rush to hire data scientists, the pendulum then swung sharply to the left: the technical skills needed to wrangle and exploit rapidly evolving data and technology capabilities were at a premium, and far more difficult for domain experts to acquire. As we head into Gen 3.0, expect the pendulum to swing back toward the center as data and analytics capabilities become commoditized and domain expertise is increasingly required to identify and apply readily available solutions correctly. We are early enough in the development of Gen 3.0 analytics that the government can adjust its hiring and contracting priorities to develop individuals and teams with deep domain expertise and fluency in emerging technology, and use their skills to navigate and extract value from the rapidly evolving Gen 3.0 analytics landscape.

Michael “Whit” Whitaker is vice president of emerging solutions for ICF International. He was co-founder of Symbiotic Engineering and specializes in the use of advanced data analytics to deliver actionable intelligence. Dr. Whitaker has a Ph.D. in Civil Engineering from the University of Colorado–Denver and an M.S. and a B.S. in Civil and Environmental Engineering from Stanford University.

Jeff Neal is a senior vice president for ICF International and founder of the blog, ChiefHRO.com. Before coming to ICF, Neal was the chief human capital officer at the Department of Homeland Security and the chief human resources officer at the Defense Logistics Agency.

Copyright © 2024 Federal News Network. All rights reserved.