XAI: Explainable artificial intelligence

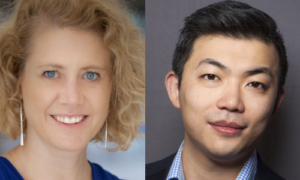



Artificial intelligence is being added to many aspects of federal information technology programs.

Claire Walsh, vice president of Engineering, and Henry Jia, Data Science lead at Excella, joined host John Gilroy on this week’s Federal Tech Talk to unpack some of the challenges in using AI, how AI is implemented, and what suggestions NIST has for taking advantage of AI.

Walsh began the discussion with a potentially confusing acronym: XAI. The “AI” stands for artificial intelligence, but unlike the “X” in UX (user experience), the “X” in this abbreviation stands for “eXplainable.” In other words, XAI is “explainable artificial intelligence.”

Much of today’s artificial intelligence operates as a black box: users can’t examine how a conclusion was derived. Much like a high school math teacher who asks students to show their work, XAI strives to show users how an answer was reached.
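To make the “show your work” idea concrete, here is a minimal Python sketch of one simple explainability technique. It is an illustration, not a method described in the interview: for a linear scoring model, each input’s contribution to a prediction can be reported exactly, so a reviewer can see which inputs pushed the score up or down relative to a baseline. The `LinearModel` class, the feature names, and the weights are all hypothetical, chosen only for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LinearModel:
    """A transparent (hypothetical) scoring model: score = bias + sum(weight * feature)."""
    weights: dict[str, float]
    bias: float = 0.0

    def predict(self, x: dict[str, float]) -> float:
        # The answer itself: bias plus the weighted sum of the inputs.
        return self.bias + sum(w * x[name] for name, w in self.weights.items())

    def explain(self, x: dict[str, float], baseline: dict[str, float]) -> dict[str, float]:
        # "Show the work": split predict(x) - predict(baseline) into per-feature shares.
        # For a linear model each share is exactly weight * (x - baseline),
        # and the shares add up to the full difference.
        return {name: w * (x[name] - baseline[name]) for name, w in self.weights.items()}


# Hypothetical features and weights, for illustration only.
model = LinearModel(weights={"late_payments": 2.0, "years_on_file": -0.5}, bias=1.0)
applicant = {"late_payments": 3.0, "years_on_file": 4.0}
average = {"late_payments": 1.0, "years_on_file": 6.0}

print(model.predict(applicant))           # -> 5.0
print(model.explain(applicant, average))  # -> {'late_payments': 4.0, 'years_on_file': 1.0}
```

Here the average input scores 0.0, so the two shares (4.0 and 1.0) account for the applicant’s entire score of 5.0. Real systems are rarely this simple; for nonlinear “black box” models, post-hoc techniques such as permutation importance or SHAP are commonly used to estimate feature attributions instead.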

Jia, who has an extensive background in higher mathematics, approaches AI from the practical perspective of transparency. Once a system produces a finding, he wants to be able to examine the validity of the data behind it, and he wants the system itself to remain open to human analysis.

The interview covered topics ranging from the ethics of AI to bias in data sets.

Copyright © 2024 Federal News Network. All rights reserved.